Thanks to Yang You

Large AI models and applications like ChatGPT and GPT-4 have become extremely popular worldwide, serving as a foundation for the technological industrial revolution and the development of AGI (Artificial General Intelligence). Technology giants are racing to release new products, and many AI experts from academia and industry are joining the related entrepreneurial wave. Generative AI is iterating rapidly, improving almost daily!

However, OpenAI has not made its models open source, leaving many curious about the technical details behind them.

- How can we stay current and participate in this wave of technology development?

- How can we lower the high cost of building and applying large AI models?

- How can we protect core data and IP from being leaked through third-party large model APIs?

Colossal-AI, a leading open-source solution for large AI models, is the first to open-source a complete RLHF pipeline based on the LLaMA pre-trained model. The pipeline covers supervised data collection, supervised fine-tuning, reward model training, and reinforcement learning fine-tuning. Colossal-AI also shares ColossalChat, the most practical open-source project that closely follows the original ChatGPT technical approach!

Open-source repository: https://github.com/hpcaitech/ColossalAI

It includes the following:

- Demo: an interactive demo you can try online without registering or joining a waiting list.

- Training code: complete, open-source RLHF training code, including 7B and 13B models.

- Dataset: an open-source bilingual (Chinese and English) dataset of 104K samples.

- Inference: 4-bit quantized inference for the 7-billion-parameter model, requiring only 4GB of GPU memory.

- Model weights: quick reproduction with only a small amount of computing power on a single server.

- Additional larger models, datasets, and other optimizations will be rapidly updated and added.

Affordable models, powerful capabilities

With fewer than 10 billion parameters, ColossalChat attains bilingual proficiency in English and Chinese through RLHF fine-tuning on top of a large language model. It achieves results comparable to ChatGPT and GPT-3.5.

For example, a general knowledge quiz

Answering in Chinese

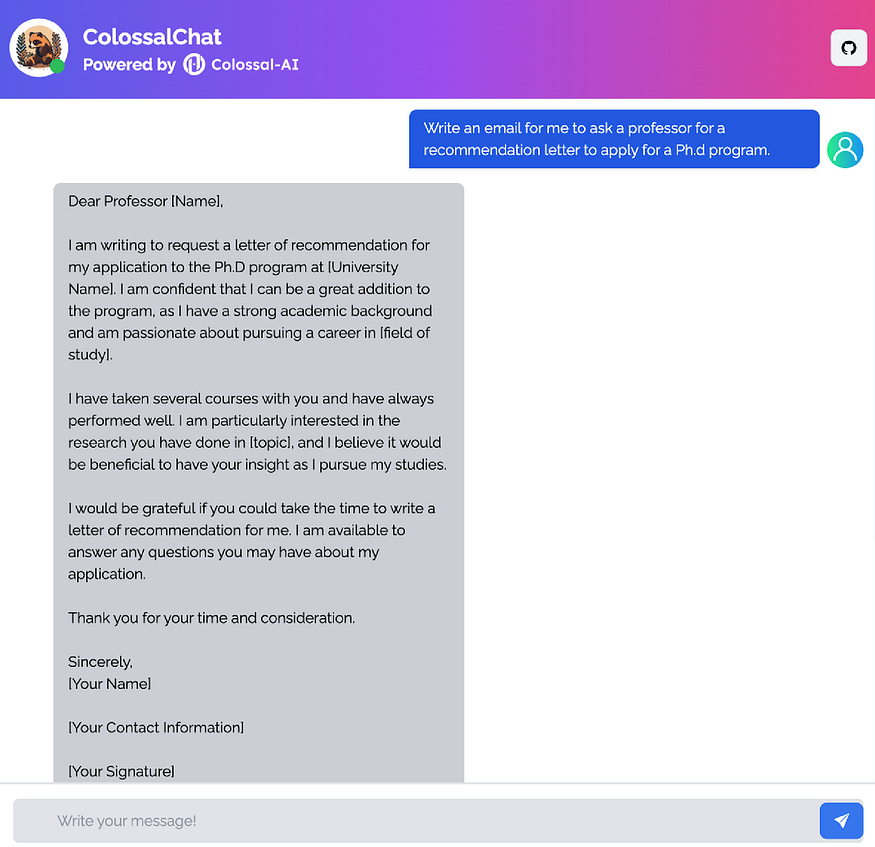

Write an email

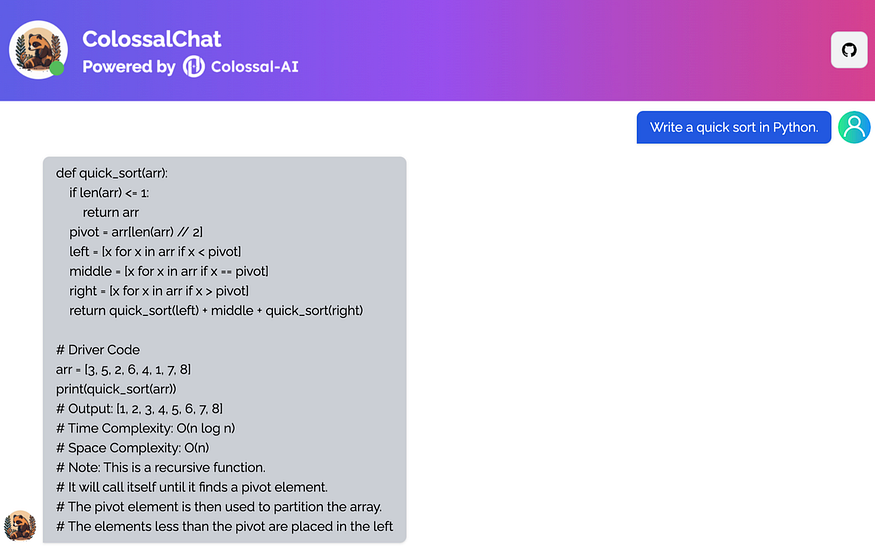

Write an algorithm

Complete ChatGPT cloning solution

Although GPT-series models such as ChatGPT and GPT-4 are highly powerful, they are unlikely to be fully open-sourced. Fortunately, the open-source community has been working hard to fill this gap. This is especially true of the PyTorch community, which is built around the most widely used and easy-to-use framework.

For example, Meta has open-sourced the LLaMA model family, with parameter sizes ranging from 7 billion to 65 billion. The 13-billion-parameter model outperforms the 175-billion-parameter GPT-3 on most benchmarks. However, because it has not gone through an instruction-tuning stage, its actual generated results are unsatisfactory.

Stanford’s Alpaca generates training data with the self-instruct method by calling OpenAI’s API. With only 7 billion parameters, this lightweight model can be fine-tuned at a fraction of the cost. It reaches conversational performance similar to a very large language model like GPT-3.5, which has 175 billion parameters.

However, existing open-source solutions only cover supervised fine-tuning, the first stage of RLHF (Reinforcement Learning from Human Feedback). The subsequent alignment and fine-tuning stages are not performed. In addition, Alpaca’s training dataset is limited to English, which to some extent restricts the model’s performance.

Yet much of the impressive performance of ChatGPT and GPT-4 comes from introducing RLHF into the training process, which aligns the generated content more closely with human values.

Based on the LLaMA model and the widespread AI framework PyTorch, ColossalChat is the first practical open-source project that includes a complete RLHF process for replicating ChatGPT-like models, and is the closest project to the original technical route of ChatGPT!

Utilizing PyTorch in the development of ColossalChat is crucial, as it provides a flexible and efficient deep-learning framework. This allows for easier experimentation, rapid prototyping, and seamless integration with other libraries, ultimately enabling ColossalChat to deliver a high-performance, user-friendly conversational AI experience.

Open-Source Training Dataset

ColossalChat releases a bilingual dataset of approximately 100,000 Q&A pairs in English and Chinese. The seed data was collected and cleaned from real-life questions on social media platforms. It was then expanded using self-instruct, at an annotation cost of approximately $900. Compared with datasets generated by other self-instruct methods, this dataset contains more realistic and diverse seed data and covers a wider range of topics. It is suitable for both fine-tuning and RLHF training. With this high-quality data, ColossalChat achieves better dialogue interactions and also supports Chinese.
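Self-instruct expansion generates new instructions from the seed set and keeps only sufficiently novel ones. The Self-Instruct paper adds a candidate to the pool only if its ROUGE-L similarity to every existing instruction is below 0.7. Below is a minimal sketch of that novelty filter, assuming plain LCS-based ROUGE-L over lowercase whitespace tokens. It is an illustration, not ColossalChat’s actual pipeline, and the model call that would produce the candidates is omitted. The function names are hypothetical.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    # Classic dynamic program, keeping only the previous row.
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0] * (len(b) + 1)
        for j, y in enumerate(b, start=1):
            if x == y:
                curr[j] = prev[j - 1] + 1
            else:
                curr[j] = max(prev[j], curr[j - 1])
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate, reference):
    """ROUGE-L F1 between two strings, using lowercase whitespace tokens."""
    c, r = candidate.lower().split(), reference.lower().split()
    if not c or not r:
        return 0.0
    lcs = lcs_length(c, r)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(c), lcs / len(r)
    return 2 * precision * recall / (precision + recall)


def filter_novel(pool, candidates, threshold=0.7):
    """Append each candidate only if it is dissimilar to everything already in the pool."""
    pool = list(pool)
    for cand in candidates:
        if all(rouge_l_f1(cand, existing) < threshold for existing in pool):
            pool.append(cand)  # accepted candidates also constrain later ones
    return pool


seeds = ["How do I cook rice on a stove?", "Translate this sentence into English."]
new = ["How do I cook rice on the stove?", "Write a short poem about autumn."]
print(filter_novel(seeds, new))
```

Here the near-duplicate rice question (ROUGE-L F1 of 0.875) is rejected and the poem request is kept.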

RLHF Algorithm Replication

The RLHF algorithm replication involves three stages:

In RLHF-Stage1, supervised instruction fine-tuning is performed on the dataset described above.
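A common way to implement supervised instruction fine-tuning is to concatenate the prompt and the reference response and compute the loss only on the response tokens. That way the model learns to answer rather than to reproduce prompts. The sketch below builds such inputs and labels, with several assumptions for illustration only:

- a whitespace "tokenizer";
- a hypothetical prompt template;
- IGNORE_INDEX = -100, the default `ignore_index` of PyTorch’s cross-entropy loss.

None of this is ColossalChat’s exact preprocessing.

```python
IGNORE_INDEX = -100  # label value skipped by the loss (PyTorch's CrossEntropyLoss default)

# Hypothetical prompt template, for illustration only.
TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n"


def build_sft_example(instruction, response, vocab):
    """Return (input_ids, labels) with the prompt part of the labels masked out.

    `vocab` maps tokens to ids and grows as new tokens appear; whitespace
    splitting stands in for a real subword tokenizer.
    """
    def encode(text):
        return [vocab.setdefault(tok, len(vocab)) for tok in text.split()]

    prompt_ids = encode(TEMPLATE.format(instruction=instruction))
    response_ids = encode(response)
    input_ids = prompt_ids + response_ids
    # Only response positions carry real targets; prompt positions are ignored.
    labels = [IGNORE_INDEX] * len(prompt_ids) + response_ids
    return input_ids, labels


vocab = {}
ids, labels = build_sft_example("Say hi", "Hello there", vocab)
print(ids, labels)
```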

In RLHF-Stage2, a reward model is trained. Human annotators rank different outputs for the same prompt, and these rankings supervise the reward model so that it learns to assign corresponding scores.

In RLHF-Stage3, a reinforcement learning algorithm is applied; this is the most complex part of the training process:
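A common choice for learning from rankings, used in InstructGPT-style pipelines, is a pairwise loss. For each pair where response A is ranked above response B, it minimizes -log σ(r_A - r_B). The sketch below assumes this loss (ColossalChat’s exact loss may differ) and averages it over all ordered pairs of one human ranking. The function names are hypothetical.

```python
import itertools
import math


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def pairwise_ranking_loss(scores_best_to_worst):
    """Average -log sigmoid(r_better - r_worse) over all pairs of a ranked list.

    `scores_best_to_worst` holds reward-model scores for responses to one prompt,
    ordered by the human ranking (index 0 = most preferred).
    """
    pairs = list(itertools.combinations(scores_best_to_worst, 2))
    if not pairs:
        raise ValueError("need at least two ranked responses")
    # combinations keeps list order, so the first score in each pair is the preferred one.
    return -sum(log_sigmoid(better - worse) for better, worse in pairs) / len(pairs)


print(pairwise_ranking_loss([1.5, 0.2, -0.7]))
```

The loss falls as the model scores preferred responses increasingly higher than dispreferred ones, and equals log 2 when it cannot tell them apart.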

In the PPO part, ColossalChat follows a two-phase process. First, in the make-experience phase, the SFT (Supervised Fine-Tuning), Actor, RM (Reward Model), and Critic models compute experience from generated responses and store it in a buffer. Then, in the parameter-update phase, the policy loss and value loss are computed from that experience.
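The two PPO phases can be sketched for a single generated response. The assumptions below follow common InstructGPT-style practice rather than ColossalChat’s exact code:

- the reward is the RM score minus a KL penalty between Actor and SFT log-probabilities;
- the advantage is simply reward minus the Critic’s value (no GAE);
- the policy loss is PPO’s clipped surrogate objective.

All names and coefficients are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Experience:
    """One buffer entry produced in the make-experience phase."""
    old_log_probs: list  # Actor log-probs of the generated tokens at sampling time
    value: float         # Critic's value estimate for the sequence
    reward: float        # KL-penalized reward
    advantage: float     # reward - value


def make_experience(actor_lp, sft_lp, rm_score, critic_value, kl_coef=0.1):
    """Combine Actor, SFT, RM, and Critic outputs into an Experience (hypothetical helper)."""
    # Approximate KL between Actor and SFT policies, averaged over response tokens.
    kl = sum(a - s for a, s in zip(actor_lp, sft_lp)) / len(actor_lp)
    reward = rm_score - kl_coef * kl
    return Experience(list(actor_lp), critic_value, reward, reward - critic_value)


def policy_loss(new_log_probs, exp, clip_eps=0.2):
    """PPO clipped surrogate loss, averaged over tokens."""
    total = 0.0
    for new, old in zip(new_log_probs, exp.old_log_probs):
        ratio = math.exp(new - old)
        clipped = min(max(ratio, 1 - clip_eps), 1 + clip_eps)
        total += -min(ratio * exp.advantage, clipped * exp.advantage)
    return total / len(new_log_probs)


def value_loss(new_value, exp):
    """Squared error between the Critic's new estimate and the observed reward."""
    return (new_value - exp.reward) ** 2


exp = make_experience([-1.0, -2.0], [-1.0, -2.0], rm_score=1.0, critic_value=0.4)
print(policy_loss([0.0, -1.0], exp), value_loss(0.4, exp))
```

Clipping caps how far a single update can move the probability ratio: in the usage line, the ratio e ≈ 2.72 is clipped to 1.2.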

In the PTX part, ColossalChat calculates the cross-entropy loss between the Actor’s output response and the response part of the input corpus. This loss is used to add pre-training gradients to the PPO gradient to maintain the language model’s original performance and prevent forgetting. Finally, the policy loss, value loss, and PTX loss are summed up for backpropagation and parameter update.
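The PTX term and the final sum can be sketched as below. The PTX loss is a token-level cross-entropy computed only on response positions, with positions labeled -100 skipped. It is then added to the PPO losses. The `ptx_coef` weight is an assumption (InstructGPT uses such a weight); the text above only says the three losses are summed, which corresponds to `ptx_coef=1.0`. Logits are plain Python lists here instead of tensors.

```python
import math

IGNORE_INDEX = -100  # positions with this label (e.g. prompt tokens) do not contribute


def masked_cross_entropy(logits, labels):
    """Mean token cross-entropy over positions whose label is not IGNORE_INDEX.

    logits: one list of unnormalized scores per position; labels: target ids.
    """
    total, count = 0.0, 0
    for row, label in zip(logits, labels):
        if label == IGNORE_INDEX:
            continue
        m = max(row)  # subtract the max for a numerically stable log-sum-exp
        log_z = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_z - row[label]
        count += 1
    return total / count if count else 0.0


def total_loss(policy_loss, value_loss, ptx_loss, ptx_coef=1.0):
    """Sum the three losses before backpropagation; ptx_coef=1.0 is a plain sum."""
    return policy_loss + value_loss + ptx_coef * ptx_loss


ptx = masked_cross_entropy([[0.0, 0.0], [2.0, 0.0]], [IGNORE_INDEX, 0])
print(total_loss(-0.6, 0.36, ptx))
```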

Quick Start

ColossalChat has open-sourced the complete code for replicating all three stages of ChatGPT training at low cost, based on the LLaMA model.

In stage 1, the SFT model is trained:

```shell
# Training with a 4-GPU server
colossalai run --nproc_per_node=4 train_sft.py \
    --pretrain "/path/to/LLaMa-7B/" \
    --model 'llama' \
    --strategy colossalai_zero2 \
    --log_interval 10 \
    --save_path /path/to/Coati-7B \
    --dataset /path/to/data.json \
    --batch_size 4 \
    --accimulation_steps 8 \
    --lr 2e-5
```
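With gradient accumulation, the optimizer steps once every `accimulation_steps` micro-batches. Assuming `batch_size` is the per-GPU micro-batch size (how such flags are usually defined), the command above updates on 4 GPUs × 4 × 8 = 128 samples per step. Below is a minimal sketch of the accumulation logic on a hypothetical one-parameter model (y ≈ w·x with squared error), so it runs without a GPU. The helper names are illustrative.

```python
def effective_batch_size(per_gpu_batch, accumulation_steps, num_gpus):
    """Samples contributing to each optimizer step under data parallelism."""
    return per_gpu_batch * accumulation_steps * num_gpus


def accumulated_grad(w, samples, micro_batch, accumulation_steps):
    """Gradient of mean squared error for y ~ w*x, accumulated over micro-batches.

    Each micro-batch gradient is divided by accumulation_steps, so the result
    equals the full-batch gradient when micro-batches have equal size.
    """
    grad = 0.0
    for step in range(accumulation_steps):
        batch = samples[step * micro_batch:(step + 1) * micro_batch]
        # d/dw of mean((w*x - y)^2) over this micro-batch
        g = sum(2 * (w * x - y) * x for x, y in batch) / len(batch)
        grad += g / accumulation_steps
    return grad


print(effective_batch_size(4, 8, 4))  # 128
```

Dividing each micro-batch gradient by the number of accumulation steps is what makes accumulation equivalent to a single large batch that would not fit in memory.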

In stage 2, the RM is trained:

```shell
# Training with a 4-GPU server
colossalai run --nproc_per_node=4 train_reward_model.py \
    --pretrain "/path/to/LLaMa-7B/" \
    --model 'llama' \
    --strategy colossalai_zero2 \
    --dataset /path/to/datasets
```

In stage 3, the model is trained with the RL algorithm:

```shell
# Training with an 8-GPU server
colossalai run --nproc_per_node=8 train_prompts.py prompts.csv \
    --strategy colossalai_zero2 \
    --pretrain "/path/to/Coati-7B" \
    --model 'llama' \
    --pretrain_dataset /path/to/dataset
```

Once the fine-tuned model weights are obtained, quantization can reduce the hardware cost of inference, and an online inference service can be launched. Deploying the 7-billion-parameter model requires only a single GPU with approximately 4GB of memory.

```shell
python server.py /path/to/pretrained --quant 4bit --gptq_checkpoint /path/to/coati-7b-4bit-128g.pt --gptq_group_size 128
```
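A back-of-the-envelope check of the 4GB figure, counting weights only: 7 billion parameters at 2 bytes each (FP16) is about 14GB, while 4 bits per weight is about 3.5GB, a 75% reduction. The helper below is a hypothetical sketch. It ignores activations, the KV cache, and the per-group scales and zero points stored alongside 4-bit weights, so treat its result as a lower bound.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Memory for model weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


params = 7e9
fp16 = weight_memory_gb(params, 16)
int4 = weight_memory_gb(params, 4)
print(f"FP16: {fp16:.1f} GB, 4-bit: {int4:.1f} GB, saving {1 - int4 / fp16:.0%}")
# prints: FP16: 14.0 GB, 4-bit: 3.5 GB, saving 75%
```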

System Performance Optimization and Development Acceleration

ColossalChat’s ability to quickly reproduce ChatGPT’s complete RLHF process is largely due to the underlying infrastructure and optimization technologies of Colossal-AI. Under the same conditions, ColossalChat trains almost three times faster than with the FSDP (Fully Sharded Data Parallel) approach used by Alpaca.

System Infrastructure: Colossal-AI

Colossal-AI, a development system for large AI models, provides the foundational support for this project. Built on standard PyTorch functionality, it enables efficient, fast training and inference of large AI models, reducing the cost of large AI model applications. Colossal-AI was developed based on the expertise of Prof. James Demmel, a Distinguished Professor at UC Berkeley, and Prof. Yang You, a Presidential Young Professor at the National University of Singapore. Since its open-source release, Colossal-AI has ranked first on GitHub Trending multiple times and has about 20,000 GitHub stars. It has also been accepted as an official tutorial at top international AI and HPC conferences such as SC, AAAI, PPoPP, CVPR, and ISC.

ZeRO + Gemini to Reduce Memory Redundancy

Colossal-AI supports ZeRO (Zero Redundancy Optimizer), which improves memory efficiency so that larger models fit at a lower cost without affecting computing granularity or communication efficiency. Its automatic chunk mechanism further improves ZeRO’s performance by increasing memory efficiency, reducing communication frequency, and avoiding memory fragmentation. The heterogeneous memory manager, Gemini, offloads optimizer states from GPU memory to CPU memory or disk. This overcomes GPU memory capacity limits, expands the scale of trainable models, and reduces the cost of large AI model applications.
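The ZeRO paper quantifies the savings for mixed-precision Adam training. Each parameter needs 2 bytes of FP16 weights, 2 bytes of FP16 gradients, and K = 12 bytes of optimizer state (FP32 master weights, momentum, and variance). ZeRO shards these across N data-parallel GPUs in stages:

- stage 1 partitions the optimizer states;
- stage 2 also partitions the gradients;
- stage 3 also partitions the parameters.

The sketch applies those formulas to a 7B model on 4 GPUs, matching the `colossalai_zero2` commands above. It counts model states only (no activations or buffers) and does not model Gemini’s offloading or the chunk mechanism.

```python
def zero_model_state_gb(num_params, num_gpus, stage, k=12):
    """Per-GPU memory (GB) for params, grads, and Adam states under ZeRO.

    Mixed-precision accounting from the ZeRO paper: 2 bytes FP16 params,
    2 bytes FP16 grads, k bytes optimizer state per parameter.
    """
    p, g, o = 2.0, 2.0, float(k)
    if stage >= 1:
        o /= num_gpus  # optimizer states partitioned
    if stage >= 2:
        g /= num_gpus  # gradients partitioned
    if stage >= 3:
        p /= num_gpus  # parameters partitioned
    return num_params * (p + g + o) / 1e9


for stage in range(4):
    print(f"ZeRO stage {stage}: {zero_model_state_gb(7e9, 4, stage):.1f} GB per GPU")
# 112.0, 49.0, 38.5, and 28.0 GB per GPU for stages 0 through 3
```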

Low-cost Fine-tuning with LoRA

Colossal-AI includes the Low-Rank Adaptation (LoRA) method for low-cost fine-tuning of large models. LoRA assumes that large language models are over-parameterized and that the weight change during fine-tuning is low-rank. The change can therefore be decomposed into the product of two smaller matrices. During fine-tuning, the pre-trained weights are frozen and only these two low-rank matrices are trained. This greatly reduces the number of trainable parameters and lowers the cost.
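Concretely, LoRA replaces a frozen d × k weight W with W + (α/r)·B·A, where B is d × r, A is r × k, and the rank r is much smaller than d and k. B starts at zero, so the adapted layer initially behaves exactly like the pre-trained one. The plain-Python sketch below shows the forward pass and the parameter savings. The class and variable names are hypothetical, the α/r scaling follows the LoRA paper, and the sizes and rank are arbitrary illustrative choices.

```python
import random


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """y = W x + (alpha / r) * B (A x), with W frozen and only A, B trainable."""

    def __init__(self, weight, r=2, alpha=4, seed=0):
        rng = random.Random(seed)
        self.weight = weight  # frozen d x k pre-trained weight
        d, k = len(weight), len(weight[0])
        self.scale = alpha / r
        # A gets small random values and B starts at zero, so B @ A is zero at first.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(k)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d)]

    def forward(self, x):
        base = matvec(self.weight, x)
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, delta)]

    def trainable_params(self):
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


# Full fine-tuning vs LoRA parameters for one 4096 x 4096 projection at rank 8:
d = k = 4096
r = 8
print(d * k, r * (d + k))  # 16777216 vs 65536, a 256x reduction
```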

Low-cost Quantized Inference

To reduce the cost of inference deployment, Colossal-AI uses GPTQ 4-bit quantized inference. On GPT, OPT, and BLOOM models, GPTQ achieves better perplexity than traditional RTN (round-to-nearest) quantization. Compared with common FP16 inference, it reduces memory consumption by 75% while sacrificing only a little throughput and perplexity.

For instance, with 4-bit quantized inference, ColossalChat-7B needs only about 4GB of GPU memory for short-sequence inference (a generation length of 128). This can run on a common consumer-grade GPU such as the RTX 3060 with just one line of code.
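For reference, here is a sketch of the RTN baseline that GPTQ is compared against. Each weight row is split into groups; the group size of 128 mirrors the `--gptq_group_size` flag above. One scale and zero point are stored per group, and every weight is rounded to the nearest of 16 levels. GPTQ goes further: it quantizes weights column by column and uses approximate second-order information to compensate for rounding error, which is not shown here. This asymmetric min-max scheme is an illustration, not Colossal-AI’s kernel.

```python
def quantize_rtn(weights, bits=4, group_size=128):
    """Asymmetric round-to-nearest quantization of a flat weight list, per group.

    Returns (codes, params) where params holds one (scale, zero) per group.
    """
    levels = 2 ** bits - 1  # 15 for 4-bit: integer codes 0..15
    codes, params = [], []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        # Widen the range to include 0 so the zero point fits in [0, levels].
        lo, hi = min(min(group), 0.0), max(max(group), 0.0)
        scale = (hi - lo) / levels or 1.0  # avoid division by zero for all-zero groups
        zero = round(-lo / scale)
        params.append((scale, zero))
        codes.extend(min(max(round(w / scale) + zero, 0), levels) for w in group)
    return codes, params


def dequantize_rtn(codes, params, group_size=128):
    """Map integer codes back to approximate float weights."""
    out = []
    for i, q in enumerate(codes):
        scale, zero = params[i // group_size]
        out.append((q - zero) * scale)
    return out


w = [i / 10 - 1.0 for i in range(21)]  # toy weights from -1.0 to 1.0
codes, params = quantize_rtn(w, group_size=8)
print(codes)
```

Each reconstructed weight is off by at most half a quantization step (scale / 2). Smaller groups shrink the scale and hence the error, at the cost of storing more scales and zero points.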

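```python
# Load the 4-bit GPTQ checkpoint when 4-bit quantization is requested.
# `args` holds the parsed server.py flags (pretrained, --quant, --gptq_checkpoint,
# --gptq_group_size); load_quant is the quantized-model loader used by the inference server.
if args.quant == '4bit':
    model = load_quant(args.pretrained, args.gptq_checkpoint, 4, args.gptq_group_size)
```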

With efficient asynchronous offloading, memory requirements can be reduced further, allowing larger models to run inference on lower-cost hardware.

ColossalChat vs. Alpaca

- ColossalChat is the first to open-source a complete RLHF pipeline, while Stanford’s Alpaca has not implemented RLHF; that is, it does not include Stage 2 or Stage 3.

- ColossalChat demonstrates superior performance and broader conversational coverage. Its significant improvements are due to the utilization of a larger and higher quality dataset, along with the implementation of reinforcement learning to align responses more closely with human-like answers.

- ColossalChat’s training process incorporates various system optimizations from Colossal-AI, so it trains about three times faster than Alpaca with the same dataset and model size. This enables researchers and small and medium-sized enterprises to train and deploy their own chatbots independently.

- The ColossalChat team has collected a larger training dataset of approximately 24 million English tokens and 30 million Chinese tokens, around 54 million tokens in total. Of these, ColossalChat independently collected 6 million English tokens and 18 million Chinese tokens.

The following are some of the performance comparisons between ColossalChat and Alpaca in language dialogues.

Limitations

Although RLHF has been introduced, performance in some scenarios still has room for improvement because of limited computing power and data.

Collaboration

Luckily, unlike previous large AI models and cutting-edge technologies that were monopolized by a few tech giants, open-source communities and startups such as PyTorch, Hugging Face, and OpenAI have also played a key role in this wave. Drawing on the open-source community’s successful experience, Colossal-AI welcomes everyone to participate, build together, and embrace the era of large models!

- You can post an issue or submit a pull request (PR).

- Join the Colossal-AI WeChat or Slack group to communicate with the team and other users.

- Send a formal proposal by email to youy@comp.nus.edu.sg.

Acknowledgments

ColossalChat owes a great deal of gratitude to many existing works and outstanding organizations. The incredible Stanford Alpaca project has been a source of inspiration. The Self-Instruct paper laid the foundation for getting strong capabilities out of small datasets. Accurate post-training quantization comes from GPTQ. Thanks to Meta AI Research for releasing the LLaMA models, to Meta for PyTorch, and to OpenAI for paving the way for the most powerful AI.

Disclaimer

Similar to Stanford Alpaca, we emphasize that ColossalChat is a contribution to the open-source community intended solely for academic research; any commercial use is prohibited:

- ColossalChat is built upon LLaMA, which is licensed for non-commercial use only.

- The instruction data is derived from OpenAI’s model API, whose terms of use prohibit the development of competing models.

- ColossalChat, like other large language models, may exhibit several common deficiencies, including hallucination, toxicity, and bias.

Reference

[1] Wang, Yizhong, et al. “Self-Instruct: Aligning Language Models with Self-Generated Instructions.” arXiv preprint arXiv:2212.10560 (2022).

[2] Touvron, Hugo, et al. “LLaMA: Open and Efficient Foundation Language Models.” arXiv preprint arXiv:2302.13971 (2023).

[3] Taori, Rohan, et al. “Stanford Alpaca: An Instruction-following LLaMA Model.” GitHub repository, https://github.com/tatsu-lab/stanford_alpaca (2023).

[4] Hu, Edward J., et al. “LoRA: Low-Rank Adaptation of Large Language Models.” arXiv preprint arXiv:2106.09685 (2021).

[5] Frantar, Elias, et al. “GPTQ: Accurate Post-Training Quantization for Generative Pre-trained Transformers.” arXiv preprint arXiv:2210.17323 (2022).

[6] OpenAI. “ChatGPT.” 2022. https://openai.com/blog/chatgpt

[7] Rajbhandari, Samyam, et al. “ZeRO: Memory Optimizations Toward Training Trillion Parameter Models.” SC20: International Conference for High Performance Computing, Networking, Storage and Analysis. IEEE, 2020.